Workload Distribution Strategies for Different Computing Environments

Workload distribution is a vital component in building a future-proof cloud architecture that aligns with your business objectives. Where you place your workloads largely determines how performant, scalable, and cost-effective your architecture is.

Get workload distribution right and you have a highly efficient, scalable cloud. Get it wrong and you end up with an architecture that bleeds money and leaves you exposed on regulatory compliance.

Cloud repatriation has become a significant trend as organizations look to cut cloud-related costs, exercise more control over mission-critical workloads, and ensure legal compliance.

Agile, cloud-smart architectures are the way to future-proof your cloud strategy amid escalating cloud costs, vendor lock-in, and compliance requirements.

Moving some workloads back on-prem may seem like the simplest solution. But for some organizations, a multi-cloud strategy may be the best way forward.

Hybrid is increasingly becoming the glue connecting different cloud options, as organizations move away from single-cloud solutions.

Even with a fully on-prem setup, it’s easy to burst into the cloud when you need more storage, compute, or networking resources.

Workload distribution therefore becomes critical for highly optimized architectures. Rackspace’s 2025 State of Cloud Report defines “Cloud Leaders” as tech leaders who have fully integrated cloud into their organizational strategy and aligned it with their business objectives.

Of these Cloud Leaders, 54% perform a thorough workload-by-workload analysis before deciding where to host their workloads. Among other tech decision-makers, 38% analyze only their critical workloads.

In this article, we explore how companies are currently distributing their workloads.

We talk about:

- the role hybrid plays in workload distribution,

- how organizations are currently distributing workloads,

- the rising role that colocation centers are playing,

- what determines where to place workloads,

- the workload distribution strategies companies are using today.

Finally, we present some calculations to illustrate the financial impact of workload distribution.

Current workload distributions

Hybrid continues to play a key role in cloud strategies and workload distribution, acting as the connector as organizations pull back from single-cloud options.

Colocation centers provide the infrastructure that can support different cloud strategies, allowing for scalable, resilient, flexible, and cost-efficient cloud architectures.

The role of hybrid in workload distributions

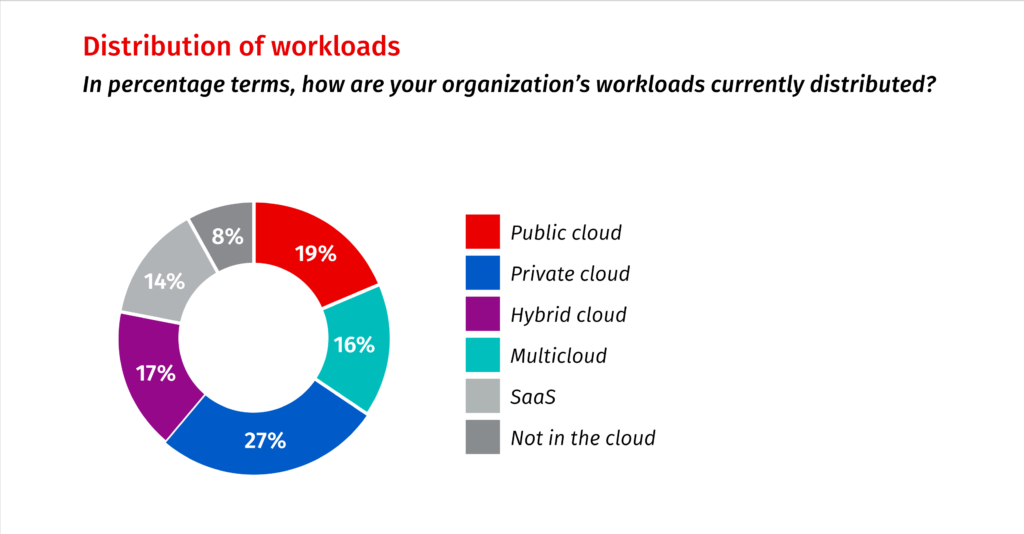

While only 17% of the surveyed tech leaders in the Rackspace report use a hybrid strategy, interest in hybrid is growing: 22% said they are planning to go hybrid. CoreSite’s 2025 State of the Data Center report shows that 98% of the surveyed IT leaders have adopted a hybrid strategy or plan to.

Hybrid integrates different environments across public, private, and multi-cloud, helping businesses build scalable, vendor-agnostic, and flexible cloud architectures. If you have a multi-cloud strategy, hybrid creates the integration framework among different cloud providers.

Hybrid lets you take advantage of the strengths of both hyperscalers and private clouds.

According to the Rackspace report, 82% of tech leaders who have implemented hybrid clouds reported satisfaction with their cloud strategies.

Hybrid suits organizations that want to optimize performance and minimize latency by combining the two: private clouds improve reliability while ensuring compliance and control over critical workloads, and public clouds provide scalability.

Hybrid is also ideal if you want to integrate legacy systems into your cloud strategy.

Ultimately, hybrid lets you distribute all your workloads efficiently across a broader, more comprehensive cloud architecture.

The role of colocation centers in workload distribution

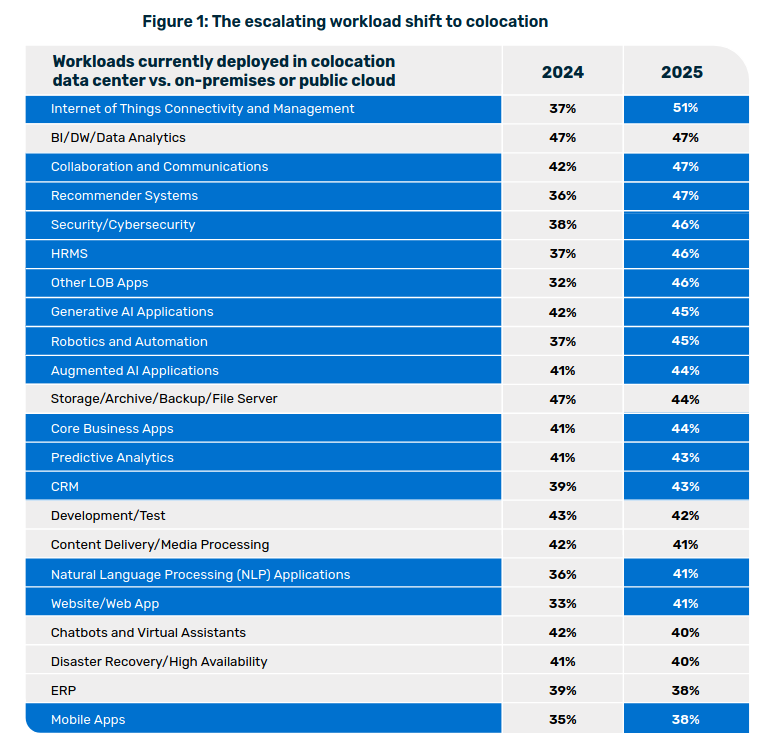

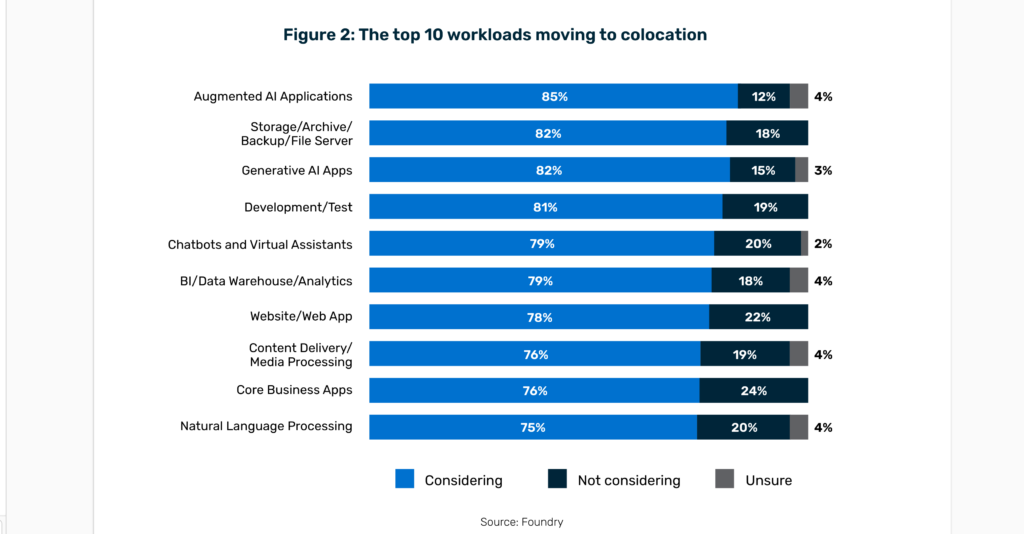

Colocation centers are becoming an increasingly popular option for workload distribution, as they can be cost-effective and give you more control over your workloads.

Businesses are considering repatriating their public cloud workloads to colocation centers.

Colocation centers are a fantastic option for a multi-cloud strategy, as they provide cross-connects between different cloud providers and their services.

They also make it easier to implement hybrid clouds, as they act as the bridge between on-prem and cloud environments.

Data-intensive workloads can easily burst into the cloud for extra resources. Because colocation facilities offer private, direct connections to cloud providers, a combination of colocation and cloud can result in more affordable data egress than transfers over the public internet.

Colocation centers also help businesses meet regulatory requirements, including those that require that data be stored within specific geographic constraints.

Colocation provides access to multiple vendors who can assist in constructing a cloud architecture that aligns with your business needs and objectives.

What determines where to place workloads

Several factors come into play when deciding how to distribute workloads. The list below isn’t exhaustive, as cloud architectures differ from company to company, but it’s an excellent starting point.

Network connectivity and latency

The infrastructure used to run critical workloads can determine where to place them. For example, in healthcare, workloads that support life-support equipment or telesurgery could run on-prem, with proper backup, security, resiliency, and high availability built in.

Workloads that would fail during an internet outage should also be on-prem. Real-time and high-performance computing workloads could benefit from the reduced latency of on-prem infrastructure.

Depending on the organization and the industry it serves, mission- and business-critical workloads can be placed in the cloud, especially where latency is not a big concern.

Cost

Predictable workloads that consistently use the same amount of resources are well suited to on-prem. Workloads whose resource requirements vary and need to scale up or down could benefit from the flexibility that the cloud provides.
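The steady-versus-variable trade-off can be sketched with a toy model, assuming owned capacity must be sized for peak demand and paid for every hour, while cloud capacity is billed only for what is used. The two hourly rates and both demand profiles are hypothetical numbers chosen for illustration, not real prices.

```python
# Hypothetical hourly rates for one unit of capacity; replace with your own figures.
ONPREM_RATE = 0.04  # amortized cost of an owned unit, paid whether or not it is used
CLOUD_RATE = 0.10   # on-demand price, paid only for units actually consumed


def onprem_daily_cost(hourly_demand: list[float]) -> float:
    """Owned capacity must be sized for the peak hour and is paid for around the clock."""
    return max(hourly_demand) * ONPREM_RATE * len(hourly_demand)


def cloud_daily_cost(hourly_demand: list[float]) -> float:
    """Pay-as-you-go capacity is billed only for the units used in each hour."""
    return sum(hourly_demand) * CLOUD_RATE


steady = [10.0] * 24              # same load every hour
bursty = [2.0] * 20 + [40.0] * 4  # mostly quiet, with a four-hour daily spike

for name, profile in [("steady", steady), ("bursty", bursty)]:
    print(f"{name}: on-prem ${onprem_daily_cost(profile):.2f}, cloud ${cloud_daily_cost(profile):.2f}")
```

With these made-up rates, the steady profile costs $9.60 a day on owned capacity versus $24.00 in the cloud, while the bursty profile flips to $38.40 versus $20.00: paying for idle peak capacity is what makes variable workloads expensive on-prem.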

Workloads that are not optimized for the cloud keep driving cloud costs up. These include legacy systems that can be extremely difficult to re-architect.

Workloads that would cost less to run on your own hardware over time could be repatriated on-prem or to a colocation center. The initial hardware costs are higher, but the savings accumulate over time.
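Whether repatriation pays off comes down to a simple break-even calculation: the upfront hardware cost divided by the monthly savings. The sketch below is a minimal version of that arithmetic; the example figures are hypothetical.

```python
import math


def months_to_break_even(upfront: float, cloud_monthly: float, onprem_monthly: float) -> float:
    """Months until cumulative on-prem cost (upfront + running) equals cumulative cloud cost.

    Returns math.inf when on-prem running costs are not lower than the cloud bill,
    because the upfront investment is then never recovered.
    """
    monthly_savings = cloud_monthly - onprem_monthly
    if monthly_savings <= 0:
        return math.inf
    return upfront / monthly_savings


# Hypothetical example: $12,000 of hardware replacing a $1,200/month cloud bill,
# with $200/month of on-prem power, cooling, and maintenance.
print(months_to_break_even(12_000, 1_200, 200))  # 12.0
```

Anything kept beyond the break-even point is savings, which is why repatriation favors long-lived, stable workloads.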

Data-processing-intensive workloads, like streaming data, are often better suited to on-prem, as data processing costs in the cloud can quickly add up.

Control and trust

Some organizations are more comfortable with having full control and ownership over particular workloads instead of relying solely on a public cloud provider.

Workloads that your internal team prefers to manage itself should be on-prem.

Staffing can also determine how much control is realistic. Bigger organizations may have the staff to run workloads on-premises; smaller organizations often lack this advantage.

Compliance and regulation

Some workloads are subject to geographic restrictions due to regulatory and compliance requirements. Those should be on-prem or in a colocation center.

Some workloads may have very specific licensing or hardware requirements that only make sense on-prem.

Skills

Workloads that require specialized skills could be on-prem or cloud, depending on whether an organization is able to access the skills internally or from a vendor or group of vendors.

Workload distribution strategies

Workload distribution strategies vary from business to business. It’s therefore hard to create an authoritative workload distribution template.

Access to skills and resources plays a key role in determining which workload strategy to implement. Let’s explore some of the ways companies decide how to distribute workloads in cloud computing.

Comprehensive workload-by-workload analysis

Workload-by-workload analysis is the most thorough approach and is likely the most effective way to build a flexible, scalable, and cost-effective cloud architecture. It works best if a business has the resources to do it internally or can work with one or more vendors.

Critical workload analysis

Analyzing only critical workloads might be more manageable for companies with fewer resources. However, to keep cloud costs under control, this approach requires an efficient FinOps strategy.

Overarching criteria and rules

Another approach is to create rules or criteria for determining where to place workloads. For example, you could have a rule that all critical workloads are to be on-prem or in a colocation center. Or that data-processing-intensive workloads should be on-prem but burst into the cloud for extra resources.
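Rules like these can be written down as a small, ordered rule set. The sketch below encodes the placement factors discussed earlier (compliance, criticality, latency, data-processing intensity, and variable demand) as hypothetical boolean attributes; the attribute names, rule order, and fallback are illustrative assumptions, not a standard framework.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload profile; a real inventory would track far more attributes."""
    name: str
    critical: bool = False
    data_residency_required: bool = False
    latency_sensitive: bool = False
    data_processing_intensive: bool = False
    variable_demand: bool = False


def place(w: Workload) -> str:
    """Apply placement rules in priority order; the first rule that matches wins."""
    # Regulatory geography, criticality, and latency all point toward infrastructure you control.
    if w.data_residency_required or w.critical or w.latency_sensitive:
        return "on-prem or colocation"
    # Heavy data processing stays local but can burst into the cloud for extra capacity.
    if w.data_processing_intensive:
        return "on-prem, bursting to cloud"
    # Fluctuating demand benefits from elastic, pay-as-you-go capacity.
    if w.variable_demand:
        return "public cloud"
    # No rule matched: fall back to a workload-by-workload review.
    return "review case by case"


workloads = [
    Workload("patient-records", critical=True, data_residency_required=True),
    Workload("video-analytics", data_processing_intensive=True, variable_demand=True),
    Workload("marketing-site", variable_demand=True),
    Workload("internal-wiki"),
]

for w in workloads:
    print(f"{w.name}: {place(w)}")
```

Because the first matching rule wins, rule order encodes your priorities: here "video-analytics" stays on-prem with cloud bursting even though its demand varies.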

Ad hoc workload distribution

You could take an ad hoc approach to workload distribution, though you should strive to streamline your placement as soon as you can.

Occasionally, the urgency to complete tasks may lead to reactive choices that result in unaffordable cloud bills.

Whatever approach you take in your workload distribution, the important thing is to periodically analyze your architecture and keep optimizing it until it is fully aligned with your business goals.

The math informing workload distribution

The last piece of the puzzle in workload distribution is financial. What does the math actually look like?

Of course, it depends. A good place to start is your cloud bill. What is your largest cost contributor? What can you eliminate entirely or reduce significantly by moving back on-prem, adopting a multi-cloud strategy, or going hybrid?

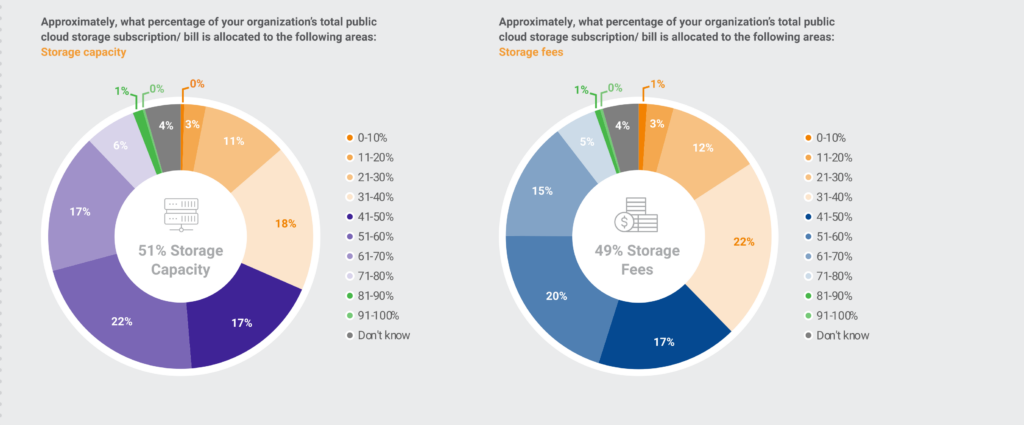

According to the 2025 Wasabi Cloud Storage Index Report, 49% of cloud storage bills go to fees (API calls, data operation fees, and data retrieval) rather than to storage capacity.

If you work in BFSI (banking, financial services, and insurance), you may want to keep critical workloads on-prem, in a private cloud, for example.

Some of that data would be infrequently accessed and therefore suited to archival cold storage. Write-heavy or critical workloads would also work well in such a setup.

If you are a small business that needs reliable shared storage but can tolerate brief outages for maintenance or repairs, you could use the build from Brian Moses’ DIY NAS: 2025 Edition, which includes:

- 5x Seagate Exos X20 18TB Enterprise HDDs,

- 2x Teamgroup MP44 1TB NVMe SSDs,

- and 1x Silicon Power 128GB Boot SSD

This should give you around 55 TB of usable storage capacity.
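The usable-capacity figure follows from subtracting the space reserved for parity. The sketch below assumes a two-drive parity layout (such as RAID-Z2); that layout is an assumption for illustration, not a detail confirmed here, and filesystem overhead is ignored.

```python
def usable_capacity_tb(drive_count: int, drive_size_tb: float, parity_drives: int) -> float:
    """Raw capacity minus the drives' worth of space consumed by parity.

    Ignores filesystem overhead, so real usable space will be somewhat lower.
    """
    if parity_drives >= drive_count:
        raise ValueError("parity drives must be fewer than total drives")
    return (drive_count - parity_drives) * drive_size_tb


# Assumption: five 18 TB drives with two drives' worth of parity.
tb = usable_capacity_tb(drive_count=5, drive_size_tb=18, parity_drives=2)
tib = tb * 10**12 / 2**40  # drive vendors use decimal TB; many operating systems report binary TiB
print(f"{tb:.0f} TB (~{tib:.1f} TiB)")
```

Under that assumption the array yields 54 TB (about 49.1 TiB as an operating system would report it), in line with the roughly 55 TB estimate.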

You would pay a one-time hardware cost, plus ongoing power, cooling, and maintenance costs.

To get 55 TB of storage capacity with the AWS S3 Glacier Flexible Retrieval tier instead, you’d pay $0.0036 per GB per month for storage, or $198 per month for 55 TB.
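The storage figure is simple multiplication. This sketch uses the $0.0036 per GB-month rate (the rate that yields $198 for 55 TB) and decimal units (1 TB = 1,000 GB); check current pricing for your region before relying on it.

```python
GLACIER_FLEXIBLE_RATE = 0.0036  # USD per GB-month; check current regional pricing


def monthly_storage_cost(capacity_tb: float, rate_per_gb_month: float = GLACIER_FLEXIBLE_RATE) -> float:
    """Storage-capacity charge only; request, retrieval, and metadata fees are extra."""
    capacity_gb = capacity_tb * 1_000  # decimal TB to GB
    return capacity_gb * rate_per_gb_month


monthly = monthly_storage_cost(55)
print(f"${monthly:.2f} per month, ${monthly * 12:,.2f} per year")
```

This prints `$198.00 per month, $2,376.00 per year`, the storage-only baseline before any of the other fees are added.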

With S3 Glacier Flexible Retrieval, bulk data retrievals and their requests are free of charge.

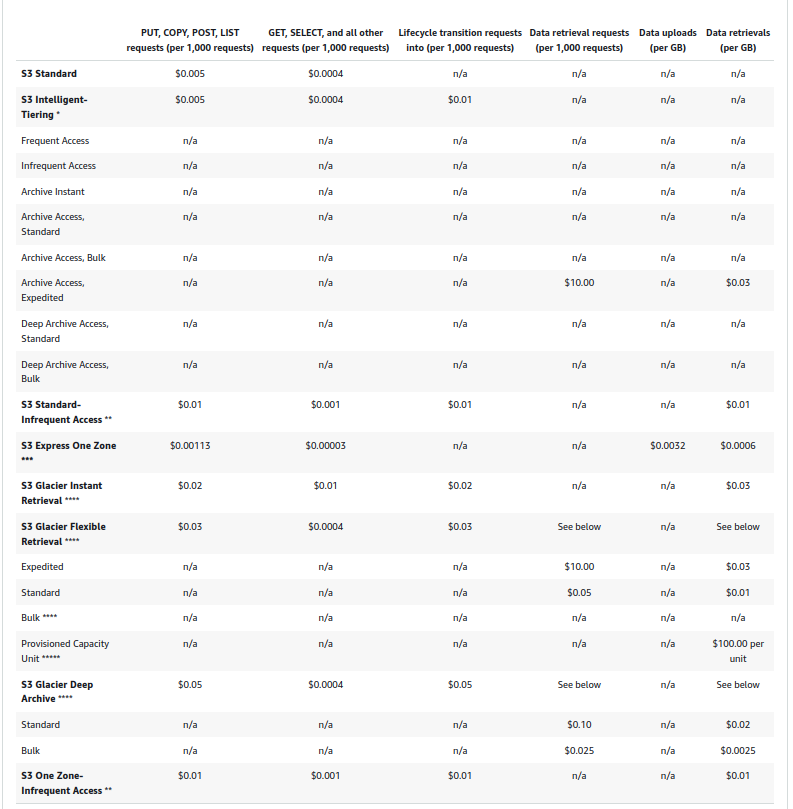

However, as the table above shows, other retrievals and requests are billed, with request charges listed per 1,000 requests.

Bulk retrieval lets you access large amounts of data at no charge, but each retrieval takes between 5 and 12 hours to complete.

If you need your data faster, you have to pay for the standard or expedited retrieval options, which are billed at the rates in the previous table.

In other words, if you don’t plan your retrievals around the bulk option, you could end up paying premium rates to retrieve data that isn’t urgent.

With this tier, you also pay for 40 KB of metadata per archived object, which makes storing many small objects proportionally more expensive. Of that, 8 KB is charged at S3 Standard rates and the remaining 32 KB at Glacier Flexible Retrieval rates.
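To see why small objects cost more, the sketch below prices the per-object metadata for the same 55 TB archive stored at different average object sizes. The $0.023 per GB-month S3 Standard rate is an assumption (the first-50-TB tier in us-east-1); the 8 KB and 32 KB split and the $0.0036 rate come from the text.

```python
S3_STANDARD_RATE = 0.023        # assumption: S3 Standard first-50-TB rate, USD/GB-month, us-east-1
GLACIER_FLEXIBLE_RATE = 0.0036  # USD/GB-month, Glacier Flexible Retrieval
STANDARD_METADATA_KB = 8        # per-object metadata billed at S3 Standard rates
GLACIER_METADATA_KB = 32        # per-object metadata billed at Glacier Flexible Retrieval rates
KB_PER_GB = 1024 ** 2


def metadata_cost_per_month(object_count: int) -> float:
    """Monthly charge for the 40 KB of per-object metadata on archived objects."""
    standard_gb = object_count * STANDARD_METADATA_KB / KB_PER_GB
    glacier_gb = object_count * GLACIER_METADATA_KB / KB_PER_GB
    return standard_gb * S3_STANDARD_RATE + glacier_gb * GLACIER_FLEXIBLE_RATE


# The same 55 TB archive stored as objects of different average sizes (decimal units).
TOTAL_MB = 55 * 1_000_000
for avg_object_mb in (100, 10, 1):
    objects = TOTAL_MB // avg_object_mb
    print(f"{avg_object_mb:>3} MB objects: {objects:>10,} objects, "
          f"${metadata_cost_per_month(objects):.2f}/month in metadata")
```

The metadata charge scales with object count, not data volume, so the 1 MB case (roughly $16 a month) costs a hundred times more than the 100 MB case for the same amount of data. Bundling small files into larger archives before upload reduces this overhead.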

There are also additional costs, depending on when you delete objects. “Objects that are archived to S3 Glacier Instant Retrieval and S3 Glacier Flexible Retrieval are charged for a minimum storage duration of 90 days.”

That means that if you delete an object before the 90 days are up, you still pay for the full 90 days: AWS adds a prorated charge for the remaining days at the normal storage rate of $0.0036 per GB per month.
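The early-deletion rule can be sketched as a prorated charge for the days remaining in the 90-day minimum. This version assumes 30-day months for proration, which simplifies how AWS actually meters storage time.

```python
MINIMUM_STORAGE_DAYS = 90
GLACIER_FLEXIBLE_RATE = 0.0036  # USD per GB-month


def early_deletion_charge(size_gb: float, days_stored: int, rate: float = GLACIER_FLEXIBLE_RATE) -> float:
    """Prorated charge for the days left in the 90-day minimum (zero once it has passed).

    Assumes 30-day months for proration, a simplification of AWS's actual billing.
    """
    remaining_days = max(0, MINIMUM_STORAGE_DAYS - days_stored)
    return size_gb * rate * remaining_days / 30


def total_paid(size_gb: float, days_stored: int, rate: float = GLACIER_FLEXIBLE_RATE) -> float:
    """Storage actually used plus any early-deletion charge."""
    storage = size_gb * rate * days_stored / 30
    return storage + early_deletion_charge(size_gb, days_stored, rate)


# Deleting 1,000 GB after 30 days still costs the same as keeping it for 90.
print(f"early-deletion charge: ${early_deletion_charge(1_000, 30):.2f}")
print(f"total paid: ${total_paid(1_000, 30):.2f} vs 90 days kept: ${total_paid(1_000, 90):.2f}")
```

Deleting after 30 days adds a $7.20 charge, so the total ($10.80) matches what you would have paid to keep the data for the full 90 days.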

Let’s now do a more detailed comparison of AWS S3 Glacier Flexible Retrieval vs. on-prem.

| Cost | AWS S3 Glacier Flexible Retrieval | On-prem |
|---|---|---|
| Upfront costs | | |
| Hardware/setup | $0 | $1,908 (complete system): storage drives $1,400; infrastructure $508 (motherboard, CPU, RAM, case, PSU, cables) |
| Ongoing monthly costs | | |
| Storage fees | $198/month ($0.0036/GB-month × 55 TB) | $0 |
| Metadata overhead | $5-$50/month (40 KB × number of objects) | $0 |
| Power costs | $0 (AWS responsibility) | $4.17/month (complete system, 43 W idle) |
| Retrieval costs | | |
| Bulk retrieval (5-12 hours) | Free | Instant access |
| Standard retrieval (3-5 hours) | $0.55/GB + $0.05 per 1,000 requests | Instant access |
| Expedited retrieval (1-5 minutes) | $1.65/GB + $10 per 1,000 requests | Instant access |
| Operational costs | | |
| API calls/requests | $0.05 per 1,000 PUT requests; $0.0004 per 1,000 GET requests | $0 |
| Lifecycle management | $0.05 per 1,000 transitions | $0 |
| Early deletion penalties | Prorated charge for the 90-day minimum (full storage cost even if deleted early) | $0 |
| Infrastructure costs | | |
| Maintenance | $0 (AWS responsibility) | Drive replacement (~$250/drive every 5-7 years) |
| Physical space | $0 (AWS responsibility) | Requires a computer/server to connect the drives |
| Year 1 total costs | $2,436-$2,976, assuming bulk retrieval only (storage: $2,376 = $198 × 12; metadata: $60-$600 = $5-$50 × 12) | $1,958 for the DIY complete system (hardware: $1,908; power: $50 = $4.17 × 12) |

The DIY NAS setup is already cheaper in year one. Additional costs may arise if you need an engineer to set it up.

Over time, assuming your storage needs stay the same, your on-prem costs could come down to just power, cooling, and maintenance, while you’d keep spending over $2,000 per year on archival storage alone on AWS.
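The year-one totals in the comparison table can be projected forward with a short sketch. It uses only the table’s figures and assumes bulk retrieval only; cooling, drive replacements, setup labor, and retrieval fees are deliberately left out, so treat the results as rough bounds rather than a forecast.

```python
# Figures from the comparison table above; AWS side assumes bulk retrieval only.
ONPREM_UPFRONT = 1_908       # complete DIY NAS system
ONPREM_POWER_MONTHLY = 4.17  # 43 W idle
AWS_STORAGE_MONTHLY = 198    # 55 TB at $0.0036/GB-month


def cumulative_costs(years: int, aws_metadata_monthly: float) -> tuple[float, float]:
    """Return (aws_total, onprem_total) after the given number of years.

    Excludes cooling, drive replacements, setup labor, and retrieval fees.
    """
    months = 12 * years
    aws_total = (AWS_STORAGE_MONTHLY + aws_metadata_monthly) * months
    onprem_total = ONPREM_UPFRONT + ONPREM_POWER_MONTHLY * months
    return aws_total, onprem_total


for years in (1, 3, 5):
    aws_low, onprem = cumulative_costs(years, aws_metadata_monthly=5)
    aws_high, _ = cumulative_costs(years, aws_metadata_monthly=50)
    print(f"year {years}: AWS ${aws_low:,.0f}-${aws_high:,.0f}, on-prem ${onprem:,.0f}")
```

Year one reproduces the table ($2,436-$2,976 versus $1,958), and by year five the AWS side reaches $12,180-$14,880 against about $2,158 on-prem. The gap widens each year because the AWS bill recurs while most on-prem cost is paid upfront; the excluded items, such as replacing drives at roughly $250 each every 5-7 years, would narrow it somewhat.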

Final Thoughts

Workload distribution is central to a future-proof cloud architecture that aligns with your business objectives. As we’ve seen, where you place each workload shapes how performant, scalable, and cost-effective your architecture is.

Analyzing your workloads can help you decide whether to use a private cloud, a public cloud, a hybrid setup, or a combination that also includes on-prem and colocation.

The workload distribution strategy you use is highly dependent on the resources and skills that you have access to.

The important thing is to regularly review and optimize where your workloads run.

We hope that this article serves as a guide to help you distribute your workloads optimally.
